What is DopNet?

DopNet is a large radar database organised as a hierarchy: each node holds the data of one person, subdivided into the different gestures recorded from that person. The data was measured with FMCW and CW radars.

DopNet's structure makes it useful for developing machine-learning gesture-recognition software and for image processing of the spectrograms.

The shared data was generated by Dr. Matthew Ritchie (University College London (UCL), London, United Kingdom) and Richard Capraru (Nanyang Technological University (NTU) and Singapore Agency for Science, Technology and Research (A*STAR), Singapore) within the UCL Radar Research Group, in collaboration with Dr. Francesco Fioranelli (Delft University of Technology (TU Delft), Delft, Netherlands). The work started as a Laidlaw Scholarship project.

How do I get hold of the data?

The data is available to universities and scientific bodies for use in their research. It can be obtained here: Data Information and Download Links

Publications and articles relating to micro-Doppler gesture recognition and radar research are available on the Publications and Articles Page. If you publish work that uses DopNet or its data and would like it added to the Publications and Articles Page, contact us.

Radar & Micro-Doppler

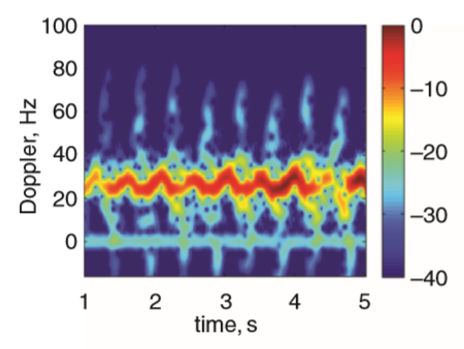

Radar sensors have previously been used successfully to classify different actions, such as walking and carrying an item, and to discriminate between the gaits of people and animals or between drones and birds [2-6]. All of this analysis relies on the phenomenon called micro-Doppler: the additional motion a target has on top of its bulk velocity. For example, a person may walk forwards at 3 m/s, but while moving at this speed their arms and legs oscillate back and forth. This movement creates a signature for which V. Chen coined the term micro-Doppler [7]. The image below shows an example micro-Doppler signature of a person walking towards a radar.

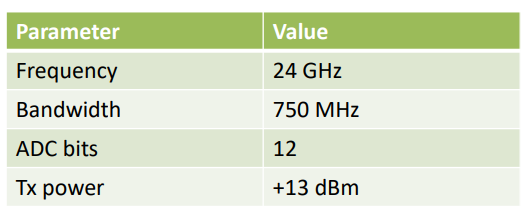
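As a worked example of the Doppler relation behind such signatures, the sketch below applies the standard monostatic Doppler formula f_d = 2·v·f_c / c, using the 24 GHz carrier of the radar used for this dataset. The limb is modelled as the bulk velocity plus a sinusoidal swing; that model and the swing amplitude and rate in the usage lines are hypothetical values chosen for illustration, not measured properties of the recordings.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def doppler_shift_hz(radial_velocity_mps: float, carrier_hz: float = 24e9) -> float:
    """Monostatic Doppler shift f_d = 2 * v * f_c / c (positive for an approaching target)."""
    return 2.0 * radial_velocity_mps * carrier_hz / C


def micro_doppler_hz(t_s: float, bulk_mps: float, swing_mps: float, swing_hz: float,
                     carrier_hz: float = 24e9) -> float:
    """Doppler of a limb modelled as bulk motion plus a sinusoidal swing (illustrative model only)."""
    v = bulk_mps + swing_mps * math.cos(2.0 * math.pi * swing_hz * t_s)
    return doppler_shift_hz(v, carrier_hz)


if __name__ == "__main__":
    print(f"wavelength: {C / 24e9 * 1e3:.1f} mm")                     # about 12.5 mm at 24 GHz
    print(f"bulk Doppler at 3 m/s: {doppler_shift_hz(3.0):.1f} Hz")   # about 480 Hz
    # Hypothetical arm swing of +/-1.5 m/s at 1 Hz on top of the 3 m/s walk
    track = [micro_doppler_hz(k / 10, 3.0, 1.5, 1.0) for k in range(11)]
    print(f"limb Doppler range: {min(track):.0f} to {max(track):.0f} Hz")
```

The bulk walk produces a single Doppler line, while the swinging limb sweeps a band of frequencies around it; this oscillating spread is what appears as the micro-Doppler pattern in a spectrogram.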

A typical short-range radar system architecture is the Frequency Modulated Continuous Wave (FMCW) radar. It continuously transmits a chirp, a signal whose frequency increases (up-chirp) or decreases (down-chirp) linearly with time. The transmitted chirp is mixed with the received signal to obtain the range and Doppler of a target. The data for this classification challenge was generated using an Ancortek 24 GHz FMCW radar with a 750 MHz bandwidth; more details about the radar system can be seen in the table below.
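To make the chirp-mixing step concrete, the sketch below applies two standard FMCW relations for a linear chirp of bandwidth B and duration T_c: the range resolution ΔR = c / (2B) and the beat-frequency-to-range mapping R = c·f_b·T_c / (2B). The 750 MHz bandwidth comes from the text; the 1 ms chirp duration is a hypothetical value, since the chirp timing used for DopNet is not stated here.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest separable range difference of an FMCW radar: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def beat_to_range_m(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Target range from the beat (mixer output) frequency of a linear chirp."""
    slope_hz_per_s = bandwidth_hz / chirp_s
    return C * beat_hz / (2.0 * slope_hz_per_s)


def range_to_beat_hz(range_m: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Inverse mapping: beat frequency produced by a target at the given range."""
    return 2.0 * range_m * (bandwidth_hz / chirp_s) / C


if __name__ == "__main__":
    B = 750e6    # bandwidth from the text
    T_C = 1e-3   # hypothetical chirp duration (assumption)
    print(f"range resolution: {range_resolution_m(B):.3f} m")   # about 0.2 m
    f_b = range_to_beat_hz(0.5, B, T_C)                         # hand 0.5 m from the radar
    print(f"beat frequency at 0.5 m: {f_b:.0f} Hz")
    print(f"recovered range: {beat_to_range_m(f_b, B, T_C):.2f} m")
```

With roughly 0.2 m range resolution, a hand gesture performed close to the radar typically occupies only a few range bins, which is consistent with the processing flow later summing a small number of range rows before the Doppler analysis.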

The focus of this data challenge is the classification of 4 separate hand gestures. There has been a vast amount of research into technologies for human-machine interfaces (HMI), including the Microsoft Kinect sensor, virtual reality wand controllers and even sensors that read brainwaves. Recently, Google developed a small radar sensor called Soli, proposed as a device for gesture recognition [1]. This research challenge proposes a compact radar sensor as an HMI device and encourages researchers to investigate the feasibility of radar in this role.

The four gestures are Wave / Pinch / Click / Swipe.


To evaluate how effective a radar is at recognising gestures, this challenge provides data to which classification methods can be applied. A database of gestures has been created with the Ancortek radar system and uploaded here. It contains signals of 4 different gesture types (Wave / Pinch / Click / Swipe). The data has been pre-processed: the signatures were cut into individual actions from a long data stream, filtered to enhance the desired components and processed to produce Doppler vs. Time data. The data is stored in this format so that it can be read in, features can be extracted and classification can be performed.

The Ancortek radar system used to generate the dataset is a 24 GHz FMCW radar that can be controlled, and its data captured, either through a standalone GUI or from a Matlab interface. The system has one transmit antenna and two receive antennas (only one receive antenna was used for this dataset). It was set up on a lab bench at the same height as the gesture action. Each recording captured 30 seconds of data, during which the candidate repeated the action numerous times. The raw data was then cut into the individual gestures that occurred over the whole period. Because these individual gesture actions have varying matrix sizes, a cell data format was used to create a ragged data cube. The shared data was produced by the following processing flow:

- De-interleave the Channel 1 and Channel 2 samples and their I/Q components.

- Break the vector of samples into a 2D matrix of chirp vs. time.

- Apply an FFT along each chirp to convert the samples to the range domain, resulting in a Range vs. Time matrix (RTI).

- Filter the signal so that static targets are suppressed and moving targets are highlighted. In radar signal processing this is called moving target indication (MTI) filtering.

- Extract the rows of the RTI that contain the gesture movement and sum them coherently.

- Generate a 2D Doppler vs. Time matrix by applying a Short Time Fourier Transform (STFT) to the vector of selected samples.

- Store the complex samples of the Doppler vs. Time matrix in a larger cell array, a data cube holding the N repeats of the 4 gestures from each person.
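The processing flow above can be sketched in Python with NumPy on a synthetic signal. This is not the group's Matlab code: the interleaving order (I1, Q1, I2, Q2), the two-pulse canceller used as the MTI filter, the Hann window and the STFT length and hop are all assumptions, and the synthetic scene (one static reflector and one moving reflector) is invented purely to exercise each step.

```python
import numpy as np


def deinterleave(raw):
    """Split a real stream assumed to be ordered I1, Q1, I2, Q2, ... into two complex channels."""
    raw = np.asarray(raw, dtype=float)
    ch1 = raw[0::4] + 1j * raw[1::4]
    ch2 = raw[2::4] + 1j * raw[3::4]
    return ch1, ch2


def to_chirp_matrix(samples, samples_per_chirp):
    """Reshape a sample vector into (fast time within a chirp) x (chirp index, i.e. slow time)."""
    n_chirps = len(samples) // samples_per_chirp
    return samples[: n_chirps * samples_per_chirp].reshape(n_chirps, samples_per_chirp).T


def range_fft(chirps):
    """FFT along fast time: each column becomes a range profile, giving the RTI matrix."""
    return np.fft.fft(chirps, axis=0)


def mti_two_pulse(rti):
    """Two-pulse canceller: subtracting consecutive chirps removes returns that do not change."""
    return np.diff(rti, axis=1)


def sum_range_bins(rti, bins):
    """Coherently (complex) sum the range bins that contain the gesture."""
    return rti[bins, :].sum(axis=0)


def stft(x, win_len=64, hop=8):
    """Short-time Fourier transform returning a Doppler (rows) vs. time (columns) matrix."""
    window = np.hanning(win_len)
    starts = range(0, len(x) - win_len + 1, hop)
    frames = np.array([x[s : s + win_len] * window for s in starts])
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)  # zero Doppler in the middle
    return spectrum.T


def synthetic_raw(n_fast=64, n_chirps=256, moving_bin=10, static_bin=20, doppler=0.125):
    """Invented test scene: a static reflector plus a reflector moving at `doppler` cycles/chirp."""
    n = np.arange(n_fast)[:, None]
    m = np.arange(n_chirps)[None, :]
    beat = np.exp(2j * np.pi * (moving_bin * n / n_fast + doppler * m))
    beat = beat + 2.0 * np.exp(2j * np.pi * static_bin * n / n_fast)  # identical on every chirp
    stream = beat.T.ravel()  # samples of chirp 0, then chirp 1, ...
    raw = np.zeros(4 * stream.size)
    raw[0::4], raw[1::4] = stream.real, stream.imag  # channel 2 left empty
    return raw


if __name__ == "__main__":
    ch1, _ = deinterleave(synthetic_raw())
    rti = range_fft(to_chirp_matrix(ch1, 64))
    rti_mti = mti_two_pulse(rti)
    print("strongest bin before MTI:", np.argmax(np.abs(rti).mean(axis=1)))     # static reflector
    print("strongest bin after MTI:", np.argmax(np.abs(rti_mti).mean(axis=1)))  # moving reflector
    dvt = stft(sum_range_bins(rti_mti, [9, 10, 11]))
    print("Doppler vs. time shape:", dvt.shape)
    # A list per gesture mimics the ragged cell array: matrices may differ in width.
    cube = {"Wave": [dvt], "Pinch": [], "Click": [], "Swipe": []}
```

The two-pulse canceller is the simplest MTI filter; higher-order cancellers or other high-pass filters along slow time would serve the same purpose, and the source does not say which one was used.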

An example Doppler vs. Time matrix for each gesture can be seen below:

[Figure: example Doppler vs. Time matrix for each gesture]

By eye, these gestures clearly look different from each other. The wave gesture has an oscillatory shape and a longer duration, whereas the click gesture occurs over the shortest time frame (a click is only a short, sharp action). The pinch and swipe actions show some similarity, which could make them challenging for a classifier.
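The duration difference noted above can be turned into a simple hand-crafted feature. The sketch below is one possible approach, not the method used by the DopNet authors: it measures how long the per-time-bin energy of a Doppler vs. Time matrix stays within a threshold of its peak. The -20 dB threshold and the frame period are hypothetical parameters that would need tuning to the actual STFT settings.

```python
import numpy as np


def active_duration_s(dvt, frame_period_s, threshold_db=-20.0):
    """Time span (s) between the first and last time bins whose energy is within
    `threshold_db` of the strongest bin. `threshold_db` is a hypothetical tuning value."""
    energy = np.sum(np.abs(dvt) ** 2, axis=0)  # energy per time bin (column)
    if energy.max() == 0:
        return 0.0  # empty signature: no active bins
    energy_db = 10.0 * np.log10(energy / energy.max() + 1e-12)
    active = np.flatnonzero(energy_db >= threshold_db)
    return (active[-1] - active[0] + 1) * frame_period_s


if __name__ == "__main__":
    # Synthetic matrix: 64 Doppler bins x 30 time bins, with activity in bins 5..14 only
    dvt = np.full((64, 30), 1e-3, dtype=complex)
    dvt[:, 5:15] = 1.0
    print(active_duration_s(dvt, frame_period_s=0.01))
```

A feature like this would be expected to separate click from wave well, but it is unlikely on its own to resolve the pinch/swipe similarity, where Doppler-spread features would also be needed.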

The shared training data consists of Doppler vs. Time matrices stored in a cell format. This labelled dataset can be used to build a classifier model. A separate Matlab .m file is shared to show users how to read the data. The whole database contains 3052 files from 6 different people.
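Because the gesture matrices have different widths, most classifiers need them flattened into (sample, label) pairs and brought to a common size. The sketch below assumes the data has already been loaded into a Python dict mapping gesture names to lists of complex Doppler vs. Time matrices that share the same number of Doppler bins. That layout, the label order and the zero-padding/cropping policy are assumptions for illustration, not the format of the shared Matlab files.

```python
import numpy as np

GESTURES = ["Wave", "Pinch", "Click", "Swipe"]  # label order is an assumption


def flatten_cube(cube):
    """Turn {gesture: [complex Doppler x time matrices]} into magnitude arrays and integer labels."""
    X, y = [], []
    for label, name in enumerate(GESTURES):
        for signal in cube.get(name, []):
            X.append(np.abs(signal))
            y.append(label)
    return X, np.array(y)


def pad_or_crop(X, width=None):
    """Zero-pad (or crop) every matrix along time so they stack into one 3D array."""
    width = width if width is not None else max(x.shape[1] for x in X)
    out = np.zeros((len(X), X[0].shape[0], width))
    for i, x in enumerate(X):
        w = min(width, x.shape[1])
        out[i, :, :w] = x[:, :w]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cube = {  # toy ragged cube: same Doppler size, different durations
        "Wave": [rng.standard_normal((64, 40)) + 0j],
        "Click": [rng.standard_normal((64, 12)) + 0j, rng.standard_normal((64, 15)) + 0j],
    }
    X, y = flatten_cube(cube)
    batch = pad_or_crop(X)
    print(batch.shape, y)
```

Zero-padding keeps every sample intact but lets the padded width leak duration information into the input; cropping or resampling to a fixed width are alternatives with different trade-offs.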

UCL Radar Research Group

More detail on the UCL Radar Group can be found here: Radar Group Wiki

The group also has a YouTube channel with technical seminars from researchers within the group: Radar Group YouTube Channel

Acknowledgements

The data provided for this competition is freely available to the public, with the complex data available on request for use by universities or scientific bodies. Participants shall use the database only for non-commercial research and educational purposes. You are required to acknowledge UCL and the DopNet dataset in any publication or media in which you use the data.

The authors would like to thank Colin Horne, Dr. Riccardo Palama’, Alvaro Arenas Pingarron and Folker Hoffman for their support in data collection for this research.

References

[1] https://atap.google.com/soli/

[2] F. Fioranelli, M. Ritchie, and H. Griffiths, “Centroid features for classification of armed/unarmed multiple personnel using multistatic human micro-Doppler,” IET Radar, Sonar Navig., vol. 10, no. 9, 2016.

[3] F. Fioranelli, M. Ritchie, S. Z. Gürbüz, and H. Griffiths, “Feature Diversity for Optimized Human Micro-Doppler Classification Using Multistatic Radar,” IEEE Trans. Aerosp. Electron. Syst., vol. 53, no. 2, 2017.

[4] D. Tahmoush and J. Silvious, “Remote detection of humans and animals,” Proc. - Appl. Imag. Pattern Recognit. Work., pp. 1–8, 2009. https://ieeexplore.ieee.org/document/5466303

[5] F. Fioranelli, M. Ritchie, and H. Griffiths, “Multistatic human micro-Doppler classification of armed/unarmed personnel,” IET Radar, Sonar Navig., vol. 9, no. 7, pp. 857–865, 2015.

[6] M. Ritchie, F. Fioranelli, H. Borrion, and H. Griffiths, “Multistatic micro-Doppler radar features for classification of unloaded/loaded micro-drones,” IET Radar, Sonar Navig., vol. 11, no. 1, 2017.

[7] V. C. Chen, F. Li, S.-S. Ho, and H. Wechsler, “Micro-Doppler effect in radar: phenomenon, model, and simulation study,” IEEE Trans. Aerosp. Electron. Syst., vol. 42, no. 1, pp. 2–21, 2006.